Node JS Streams: Everything You Need to Know

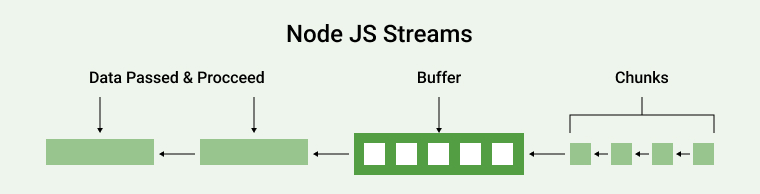

Streams act as a bridge between the place where an application’s data is stored and the place where it is processed. A stream reads data from a source and writes it to a destination continuously, piece by piece. This approach is popular because it lets Node.js developers handle large volumes of data in their clients’ applications. Developers who work with Node.js, including those you hire in India, use it to move away from traditional techniques that read or write data all at once, which need more memory because the whole payload must be gathered before processing can start.

Node.js streams let an application read a file in chunks and start processing before the whole file is loaded. This improves memory efficiency and often overall application performance. This blog covers what streams are, their types, and the benefits that lead Node.js app development companies to use them in many of their applications.

1. What are Node JS Streams?

In software development, streams are abstract interfaces for working with data that is read or written sequentially. A Node.js stream is the mechanism developers use to handle the flow of data between input and output sources.

Node.js streams matter because they let developers handle data efficiently in large web applications. With streams, data is loaded in chunks instead of being loaded into memory all at once. Data can therefore flow from its source to its destination as it becomes available, without first buffering the entire payload.

1.1 The Stream Module

In Node.js, stream is a core module that gives programmers the building blocks for handling streaming data. It provides a set of APIs for creating streams, reading from them, and writing data to them.

1.2 Node Js Streaming API

The streaming API in Node.js is the set of classes and functions exposed by the stream module for creating, reading, and writing streams. Here are the main components of the Node.js Stream API:

- Stream Classes: The Stream API offers the classes used to work with Node.js streams: Readable, Writable, Duplex, and Transform.

- Stream Events: Streams emit events that applications can listen for, such as data, end, finish, and error. These events are used to handle the different stages of stream processing.

- Stream Methods: Streams expose methods such as on('data', ...), which registers a handler for incoming data, and pipe(), which connects a readable stream to a writable stream.

- Stream Options: Streams can be configured through options such as highWaterMark, which sets the internal buffer threshold, and encoding, which sets the character encoding.
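To make these components concrete, here is a minimal sketch that touches a class (Readable), the data, end, and error events, and the highWaterMark option. The helper name collectChunks and the sample values are assumptions made for illustration, not part of the Node.js API.

```javascript
const { Readable } = require('stream');

// Hypothetical helper for this sketch: gathers every chunk into an array.
function collectChunks(readable) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    readable.on('data', (chunk) => chunks.push(chunk)); // a chunk arrived
    readable.on('end', () => resolve(chunks));          // no more data
    readable.on('error', reject);                       // something failed
  });
}

// Class + option: a Readable built from an array, with a buffer threshold of two items.
const source = Readable.from(['alpha', 'beta', 'gamma'], { highWaterMark: 2 });
console.log(source.readableHighWaterMark); // 2

collectChunks(source).then((chunks) => {
  console.log(chunks); // [ 'alpha', 'beta', 'gamma' ]
});
```

Wrapping the events in a promise is only one possible design. It keeps the example short and makes the end of the stream easy to wait for.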

2. Types of Node.js Streams

Now, after understanding what Node.js streams are, we will go through the different types of Node.js streams. Here are the four fundamental stream types in Node.js –

2.1 Readable Stream

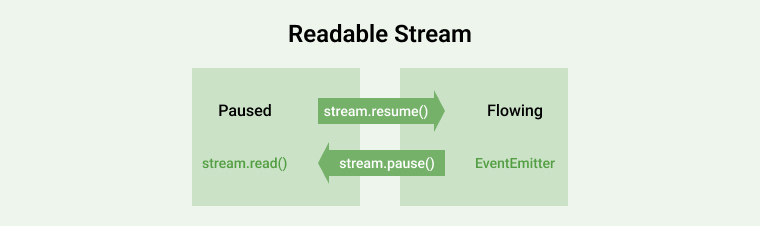

Readable streams are one of the most widely used types of Node.js streams. Developers use them when an application needs to read data from a source, which can be anything from a network socket to a file. A readable stream emits a ‘data’ event whenever new data is available and an ‘end’ event once there is no more data to read. Let’s see how readable streams in Node.js work:

For instance, developers can use ‘fs.createReadStream()’ to read files, and the HTTP requests an application receives arrive as ‘http.IncomingMessage’ objects, which are readable streams as well. Here is a practical example of a readable stream in Node.js.

```javascript
const fs = require('fs');

// Create a readable stream from a file
const readableStream = fs.createReadStream('test.txt', { encoding: 'utf8' });

// Handle 'data' events; with an encoding set, each chunk is a string
readableStream.on('data', (chunk) => {
  console.log(`Received a chunk of ${chunk.length} characters of data.`);
});

// Handle the 'end' event
readableStream.on('end', () => {
  console.log('Reached the end of the file…!');
});

// Handle errors
readableStream.on('error', (err) => {
  console.error(`Error Occurred: ${err}`);
});
```

Assuming test.txt contains only the text "My name is ABC", the output of the above code is:

```
Received a chunk of 14 characters of data.
Reached the end of the file…!
```

In the above example, the fs module is used to create a readable stream from a file named ‘test.txt’. The encoding option is set to ‘utf8’ so that the file is read as strings rather than raw buffers. The stream emits a ‘data’ event every time a chunk is read from the file, and an ‘end’ event once the end of the file is reached. If anything goes wrong, such as the file not existing, the ‘error’ handler logs the error to the console.
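Readable streams are also async iterables, so the same reading logic can be written with a for await loop instead of event handlers. The sketch below assumes a hypothetical helper named countCharacters and a temporary demo file; neither comes from the original example.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: read a file through a stream and count its characters.
async function countCharacters(filePath) {
  const readable = fs.createReadStream(filePath, { encoding: 'utf8' });
  let total = 0;
  // Each iteration receives one chunk; a stream error rejects the loop.
  for await (const chunk of readable) {
    total += chunk.length;
  }
  return total;
}

// Demo with a temporary file so the sketch is self-contained.
const demoFile = path.join(os.tmpdir(), 'readable-demo.txt');
fs.writeFileSync(demoFile, 'My name is ABC');

countCharacters(demoFile).then((total) => {
  console.log(`Read ${total} characters.`); // Read 14 characters.
});
```

With this style, errors surface as a rejected promise, so a single try/catch or .catch() can replace a separate ‘error’ handler.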

2.2 Writable Streams

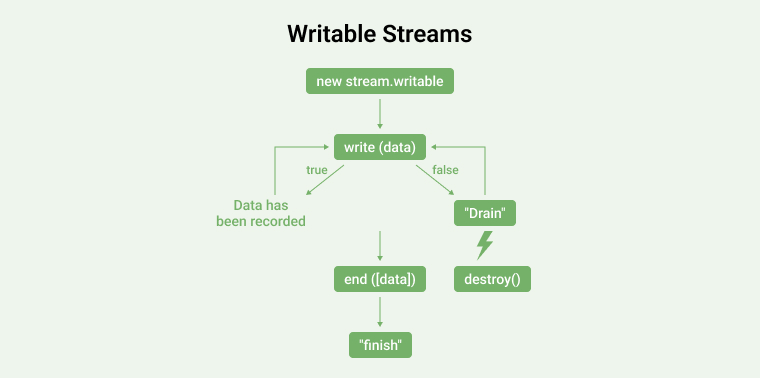

Another popular type of Node.js stream is the Writable stream. Developers use it when they want to write data to a destination such as a network socket or a file. Writable streams have a ‘write()’ method, which writes data to the stream, and an ‘end()’ method, which signals that no more data will be written. The flowchart above depicts how a Writable stream works in Node.js.

For instance, developers use ‘fs.createWriteStream()’ to write to a file, and ‘http.ServerResponse’ to write HTTP responses. Here is a practical example of a Node.js writable stream.

```javascript
const fs = require('fs');

// Create the writable stream
const writableStream = fs.createWriteStream('result.txt');

// Handle stream events
writableStream.on('finish', () => {
  console.log('Writable Stream Ended!');
});
writableStream.on('error', (error) => {
  console.error(`Error Occurred: ${error}`);
});

// Write data to the file
writableStream.write('Hello from writable stream');

// End the stream: no more data will be written
writableStream.end();
```

Here is the output of the above code:

```
Writable Stream Ended!
```

In the above example, the fs module is used to create a writable stream for the file ‘result.txt’. The data is written to the stream with a single call to the write() method, and the stream is then closed with the end() method. Once all the data has been flushed to the file, the ‘finish’ event is emitted and its handler logs a message. If an error occurs, the ‘error’ handler logs it to the console.
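When many chunks are written, write() returns false once the internal buffer is full, and the ‘drain’ event signals that writing can resume. The following sketch shows one way to respect that signal. The helper name writeLines, the file names, and the line counts are assumptions for illustration.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { once } = require('events');

// Hypothetical helper: write `lineCount` lines while respecting backpressure.
async function writeLines(filePath, lineCount) {
  const writable = fs.createWriteStream(filePath);
  for (let i = 0; i < lineCount; i++) {
    // write() returns false when the internal buffer has reached its threshold.
    if (!writable.write(`line ${i}\n`)) {
      await once(writable, 'drain'); // wait until it is safe to continue
    }
  }
  writable.end();
  await once(writable, 'finish'); // resolves once all data has been flushed
}

const demoFile = path.join(os.tmpdir(), 'writable-demo.txt');
writeLines(demoFile, 10000).then(() => {
  console.log('Wrote 10000 lines.');
});
```

Waiting for ‘drain’ keeps memory usage bounded, because the loop never queues more data than the stream is ready to accept.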

2.3 Duplex Streams

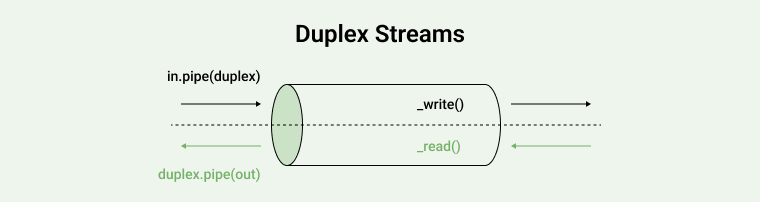

After readable and writable streams, it’s time to look at duplex streams. A duplex stream is bidirectional: it can be both read from and written to.

This stream type is used for tasks like proxying data from one file or network socket to another. It implements both the Readable and Writable interfaces, so it has the methods of both. Here is an example of a duplex stream.

```javascript
const { Duplex } = require('stream');

const duplex = new Duplex({
  // Writable side: log every chunk written to the stream
  write(chunk, encoding, callback) {
    console.log(chunk.toString());
    callback();
  },
  // Readable side: emit one character per call, then end the stream
  read(size) {
    if (this.currentCharCode > 100) {
      this.push(null);
      return;
    }
    this.push(String.fromCharCode(this.currentCharCode++));
  }
});

duplex.currentCharCode = 75;

process.stdin.pipe(duplex).pipe(process.stdout);
```

In the above example, a new duplex stream is created with the Duplex class. The write method is called whenever data is written to the duplex stream, and here it logs each chunk to the console. The read method is called whenever a consumer asks the stream for data, and here it pushes the characters with codes 75 to 100 one at a time before signaling the end with null. The standard input stream is piped into the duplex stream, and the duplex stream is piped into the standard output stream. As a result, whatever the user types is written to the duplex stream and logged, while the generated characters are sent to standard output. The two sides of the duplex stream work independently of each other.
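The independence of the two sides is easier to see without standard input and output. The sketch below is a simplified variant that can run on its own; the names createDemoDuplex and runDemo and the sample data are made up for this illustration.

```javascript
const { Duplex } = require('stream');

// Hypothetical factory: the writable side stores chunks in `received`,
// the readable side emits the letters K, L, M and then ends.
function createDemoDuplex(received) {
  const letters = ['K', 'L', 'M'];
  return new Duplex({
    write(chunk, encoding, callback) {
      received.push(chunk.toString());
      callback();
    },
    read() {
      this.push(letters.length > 0 ? letters.shift() : null);
    }
  });
}

// Feed the writable side, drain the readable side, and resolve with both results.
function runDemo() {
  return new Promise((resolve, reject) => {
    const received = [];
    let emitted = '';
    const duplex = createDemoDuplex(received);
    duplex.on('data', (chunk) => { emitted += chunk.toString(); });
    duplex.on('end', () => resolve({ received, emitted }));
    duplex.on('error', reject);
    duplex.write('hello');
    duplex.end();
  });
}

runDemo().then(({ received, emitted }) => {
  console.log(received); // [ 'hello' ]
  console.log(emitted);  // KLM
});
```

What is written ('hello') never shows up on the readable side, and what is read ('KLM') was never written, which is the difference between a plain duplex stream and a transform stream.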

2.4 Transform Streams

The last stream type in our list is the Transform stream. It is a type of duplex stream that modifies data as it passes through. Transform streams are commonly used for encryption, compression, and data validation.

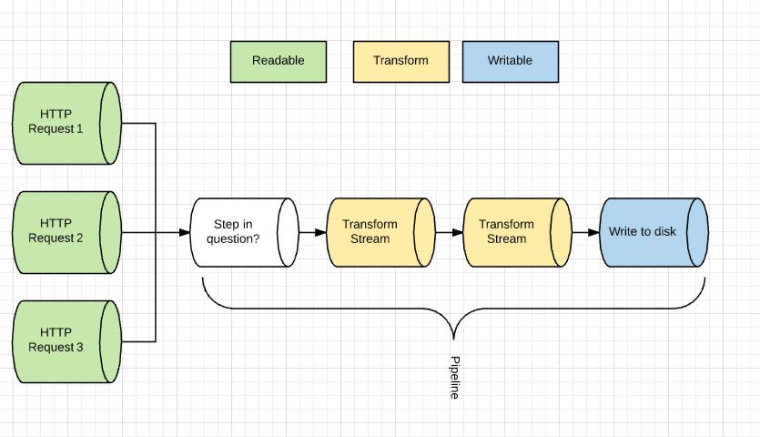

As shown in the above image, Transform inherits from Duplex, which means that it also has both a readable and a writable side. Data written to a transform stream is passed through its transform function, and the result becomes available on the readable side. Here is an example:

```javascript
const fs = require('fs');
// Import the stream APIs
const { Transform, pipeline } = require('stream');

// Create the readable stream
const readStream = fs.createReadStream('inputfile.txt');
// Create the writable stream
const writeStream = fs.createWriteStream('outputfile.txt');

// Set the encoding to utf8
readStream.setEncoding('utf8');

// Transform each chunk into uppercase
const uppercaseWord = new Transform({
  transform(chunk, encoding, callback) {
    console.log(`Data to be processed: ${chunk}`);
    callback(null, chunk.toString().toUpperCase());
  }
});

// pipeline() connects the streams, ends writeStream when the input ends,
// and reports an error from any of them. A separate pipe() chain or a manual
// writeStream.end() call would process the data twice or end the file too early.
pipeline(readStream, uppercaseWord, writeStream, (error) => {
  if (error) {
    console.error(`Error occurred during stream transforming: ${error}`);
  } else {
    console.log('Pipeline execution completed successfully!');
  }
});

// Handle stream events
readStream.on('end', () => {
  console.log('Readable Stream Ended!');
});
readStream.on('error', (error) => {
  console.error(`Readable Stream Ended with an error: ${error}`);
});
writeStream.on('finish', () => {
  console.log('Writable Stream Finished!');
});
writeStream.on('error', (error) => {
  console.error(`Writable Stream error: ${error}`);
});
```

Assuming inputfile.txt contains the text "My name is ABC", the output of the above code looks like this:

```
Data to be processed: My name is ABC
Readable Stream Ended!
Writable Stream Finished!
Pipeline execution completed successfully!
```

As seen in the above code, a Transform stream named uppercaseWord is created by passing a transform function to the Transform constructor. The transform function converts each incoming chunk to uppercase with the toUpperCase method and hands the result to the callback, whose first argument is reserved for an error. The pipeline function then connects the readable stream, the transform stream, and the writable stream, and calls its final callback when the whole chain has finished or failed.
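The same transform can also be written as a class that extends the built-in Transform class and implements the _transform method. The sketch below assumes a class named UpperCaseTransform and a hypothetical helper upperCaseAll that feeds it from an in-memory array instead of a file.

```javascript
const { Readable, Transform } = require('stream');

// Class-based variant: push() emits the transformed data,
// and callback() signals that this chunk has been processed.
class UpperCaseTransform extends Transform {
  _transform(chunk, encoding, callback) {
    this.push(chunk.toString().toUpperCase());
    callback();
  }
}

// Hypothetical helper: run an array of strings through the transform
// and join the transformed chunks into one string.
async function upperCaseAll(parts) {
  const transformed = Readable.from(parts).pipe(new UpperCaseTransform());
  let result = '';
  for await (const chunk of transformed) {
    result += chunk.toString();
  }
  return result;
}

upperCaseAll(['My name ', 'is ABC']).then((result) => {
  console.log(result); // MY NAME IS ABC
});
```

The class form is handy when the transform needs its own state or is reused in several places, while the constructor-option form shown earlier is shorter for one-off transforms.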

3. When to Use Node.js streams

Once the concept of Node.js streams and their types is clear, the next question is when to use them. Streams come in handy when working with files or payloads that are large and would take a long time, and a lot of memory, to read in one go. For instance, a video streaming application like Netflix needs to transfer data in small chunks so that playback can start before the whole file has arrived and users see less latency.

Here is the process that can be used while utilizing the Node.js streams.

3.1 The Batching Process

Batching is a common pattern for handling data: the chunks are collected as they arrive, kept in memory, and written out only once all the data has been received. It works with stream events but does not give the memory benefits of streaming, because the whole payload is buffered. Below is code that shows how the batching process works.

```javascript
const fs = require("fs");
const https = require("https");

const fileUrl = "demo URL";

https.get(fileUrl, (response) => {
  // Collect every chunk in memory
  const chunks = [];
  response
    .on("data", (data) => chunks.push(data))
    .on("end", () =>
      // Write everything in one go once the response has ended
      fs.writeFile("writablefile.txt", Buffer.concat(chunks), (err) => {
        err ? console.error(err) : console.log("File saved successfully!");
      })
    );
});
```

In the above code, every chunk received in a ‘data’ event is pushed into an array. When the ‘end’ event fires, all the data has been received, so the chunks are joined with Buffer.concat and written to the file in one go with fs.writeFile.

3.2 Composing Streams in Node.js

The fs module exposes part of the native Node.js stream API through fs.createReadStream() and fs.createWriteStream(). Streams created this way can be composed with other streams.
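As a rough sketch of such a composition, the code below connects an fs read stream directly to an fs write stream, so chunks are written as they arrive instead of being collected first. A local temporary file stands in for the HTTP response of the batching example, and the helper name streamToFile and the file names are assumptions.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { pipeline } = require('stream');

// Hypothetical helper: stream a source file into a target file chunk by chunk.
function streamToFile(sourcePath, targetPath) {
  return new Promise((resolve, reject) => {
    pipeline(
      fs.createReadStream(sourcePath),  // readable stream from fs
      fs.createWriteStream(targetPath), // writable stream from fs
      (err) => (err ? reject(err) : resolve())
    );
  });
}

const sourceFile = path.join(os.tmpdir(), 'compose-source.txt');
const targetFile = path.join(os.tmpdir(), 'compose-target.txt');
fs.writeFileSync(sourceFile, 'streamed, not batched');

streamToFile(sourceFile, targetFile).then(() => {
  console.log(fs.readFileSync(targetFile, 'utf8')); // streamed, not batched
});
```

Because no array of chunks is kept, memory usage stays roughly constant regardless of the size of the source.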

4. Piping in Node JS Streams

Piping is the approach developers use to connect multiple streams. It is widely used because it lets a Node.js programmer break a complex process into smaller tasks, each handled by its own stream. For this, Node.js offers a native pipe method:

```javascript
fileStream.pipe(uppercase).pipe(transformedData);
```
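A chain like this does not forward errors from one stream to the next, so a longer chain is often written with pipeline instead. The sketch below uses the promise-based pipeline from stream/promises, which assumes Node.js 15 or later. The stages (uppercase, gzip, file) and the helper name compressUppercased are illustrative choices, not part of the original snippet.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const zlib = require('zlib');
const { Readable, Transform } = require('stream');
const { pipeline } = require('stream/promises');

// Small task 1: uppercase every chunk.
const createUppercase = () => new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  }
});

// Hypothetical helper: text -> uppercase -> gzip -> file.
// The returned promise rejects if any stage fails.
async function compressUppercased(text, targetPath) {
  await pipeline(
    Readable.from([text]),           // source
    createUppercase(),               // small task 1
    zlib.createGzip(),               // small task 2: compression
    fs.createWriteStream(targetPath) // destination
  );
}

const outputFile = path.join(os.tmpdir(), 'piped-demo.txt.gz');
compressUppercased('my name is abc', outputFile).then(() => {
  const restored = zlib.gunzipSync(fs.readFileSync(outputFile)).toString();
  console.log(restored); // MY NAME IS ABC
});
```

Each stage does one job, so stages can be added, removed, or reordered without touching the others.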

5. Advantages of Node Js Streaming

To wrap up, we will go through some of the major advantages of Node.js streaming, which make it attractive to companies like Netflix, Walmart, Uber, and NASA that run Node.js applications. Here are some of the advantages that lead teams to use Node.js streams in their applications.

- Flexibility: Node.js streams can handle a wide range of data sources and destinations, including files, HTTP requests and responses, and network sockets. This makes them a versatile choice for applications that would otherwise need a lot of memory to process that data.

- Performance: Because data is processed in chunks, work can start as soon as the first chunk arrives instead of waiting for the whole payload. This makes reading and writing more efficient and helps applications respond in real time.

- Modularity: Node.js streams can be piped and combined, so complex processing can be broken into smaller steps. This also makes the code easier to read and write.

6. Conclusion

As seen in this blog, Node.js streams are very important when it comes to managing data for large applications. Unlike traditional methods that load everything at once, they let developers build real-time applications that perform well while keeping memory usage low. Streams make data handling easier, which helps applications built on them run smoothly. Though the concept takes some effort to learn, streams are very beneficial once Node.js developers master them.

7. FAQs

7.1 What are streams in node JS?

In Node.js, a stream is an abstract interface for working with streaming data. The node:stream module provides the API for building applications that use streams. Node.js offers many stream objects. For example, a request to an HTTP server and process.stdout are both stream instances.

7.2 What is stream module?

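The stream module (node:stream) is a core Node.js module that provides the base classes and utility functions for implementing and consuming streams. Each stream treats the data passing through it as a sequence of chunks that it can read, write, or transform.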

7.3 What is the work of streams?

In Node.js, there are 4 different kinds of streams.

- Writable: Streams to which data can be written.

- Readable: Streams from which data can be read.

- Duplex: Streams that are both readable and writable.

- Transform: Duplex streams that modify or transform the data as it is written and read.

7.4 What is stream in API?

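The term Stream API is also used outside Node.js. In Java, the Stream API was introduced in Java 8 alongside the Collections API. A Java stream represents a sequence of elements on which operations such as filtering, sorting, finding, and collecting can be performed. It is a different concept from Node.js streams, which move data in chunks between a source and a destination.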

Hardik Dhanani has a strong technical proficiency and domain expertise which comes by managing multiple development projects of clients from different demographics. Hardik helps clients gain added-advantage over compliance and technological trends. He is one of the core members of the technical analysis team.

Nov 1, 2023
